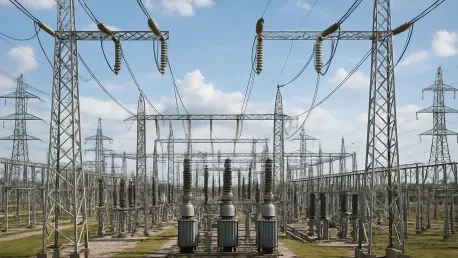

With extensive experience in energy management and electricity delivery, Christopher Hailstone is our go-to expert on the future of grid reliability and security. Today, he joins us to discuss one of the most transformative—and paradoxical—forces shaping the energy landscape: artificial intelligence. We will explore how AI, despite being a massive new source of energy demand, also holds the key to creating a more efficient, predictive, and resilient electric grid. Our conversation will cover the evolution of AI from an advisory tool to an autonomous operator, the critical role of data accuracy in creating “digital twins” of the grid, the shift from reactive to proactive maintenance, and the rise of virtual power plants in balancing our increasingly complex energy ecosystem.

We’re seeing a paradox where AI is both a massive new source of energy demand and a potential solution for grid management. How can AI-driven efficiencies in grid operations realistically offset the immense power needs of data centers? Please share specific metrics or examples.

That’s the central challenge we’re facing, and it’s a fascinating one. The numbers are staggering: we’re looking at AI contributing roughly 3-6% of total energy demand by 2030, with a compound annual growth rate of 40% starting next year. You can almost feel the strain on the system just thinking about it. But this is where the other side of the AI coin comes into play. While it’s a voracious consumer of energy, it’s also an incredibly powerful tool for optimization. The offset isn’t a simple one-to-one exchange. It comes from AI’s ability to reduce operational overhead, manage outages with surgical precision, and, most importantly, prevent a significant number of them from ever happening. When you layer in hardware advancements like more energy-efficient GPUs and smarter software techniques like model pruning, you start to see a holistic picture where AI’s positive impact truly begins to counterbalance its own footprint. It’s about turning a static, reactive system into one that is agile and proactive, and that fundamental shift is where the real savings and efficiencies are unlocked.
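To make the 40% growth figure concrete, the short sketch below shows how such a rate compounds. The baseline of 1.0 and the five-year horizon are illustrative assumptions, not figures from the interview; the point is only that a 40% CAGR multiplies demand roughly 5.4 times in five years.

```python
def compound_growth(base: float, rate: float, years: int) -> list[float]:
    """Return the projected value at the end of each year, starting from `base`."""
    values = []
    value = base
    for _ in range(years):
        value *= 1 + rate  # apply one year of growth
        values.append(value)
    return values


# Hypothetical baseline: 1.0 unit of AI-related demand in year 0.
projection = compound_growth(base=1.0, rate=0.40, years=5)
for year, value in enumerate(projection, start=1):
    print(f"Year {year}: {value:.2f}x baseline")
```

Any efficiency gains AI delivers on the grid side have to be weighed against a curve of this shape, which is why the answer stresses prevention and optimization rather than a one-to-one offset.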

You describe a future where AI acts as a grid “autopilot,” evolving from an investigator to an autonomous operator. What are the most significant technical and human-trust hurdles in moving from an advisory role to autonomous execution, and what steps ensure a safe transition?

The journey from an advisory role to full autonomy is paved with caution, and the biggest hurdle is undoubtedly human trust. For a century, human operators have been making high-stakes decisions with incomplete data, a tremendously stressful responsibility. The idea of handing the keys over to an AI is daunting. The transition has to be gradual, building confidence at each stage. We start with “AI-as-Investigator,” which simply saves operators crucial time by surfacing the right information instantly. Then we move to “AI-as-Advisor,” where the system learns from historical events and makes informed recommendations. This is where operators can see the logic, validate the suggestions, and build a working relationship with the technology. The final step, “AI-as-Operator,” where the system takes autonomous action, can only happen after a deep, proven trust is established. Technically, this requires a robust architecture, with a Model Context Protocol (MCP) layer for agent orchestration and resilient APIs to ensure commands are executed flawlessly. The transition is less a switch than a slow, deliberate handover, ensuring that at every point safety and reliability are paramount and human oversight is maintained until the system proves itself beyond any doubt.
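One way to picture the staged handover is as a gate that decides what happens to each AI-proposed action depending on the current autonomy level. The sketch below is a hypothetical illustration: the level names mirror the three stages described above, but the `route_action` function, the 0.95 confidence threshold, and the breaker ID are assumptions made for this example, not part of any described system.

```python
from dataclasses import dataclass
from enum import Enum


class AutonomyLevel(Enum):
    INVESTIGATOR = 1  # surfaces relevant data only
    ADVISOR = 2       # recommends an action for a human to approve
    OPERATOR = 3      # may execute actions autonomously


@dataclass
class ProposedAction:
    description: str
    confidence: float  # model's self-reported confidence in [0, 1]


def route_action(level: AutonomyLevel, action: ProposedAction,
                 min_confidence: float = 0.95) -> str:
    """Decide how a proposed action is handled at the current autonomy level."""
    if level is AutonomyLevel.INVESTIGATOR:
        return f"SURFACE: context for operator review ({action.description})"
    if level is AutonomyLevel.ADVISOR:
        return f"RECOMMEND: {action.description} (awaiting operator approval)"
    # Operator level: execute only when confidence clears the bar;
    # otherwise hand the decision back to a human.
    if action.confidence >= min_confidence:
        return f"EXECUTE: {action.description}"
    return (f"ESCALATE: {action.description} "
            f"(confidence {action.confidence:.2f} below {min_confidence:.2f})")


# Hypothetical action: isolate a fault by opening breaker B-12.
action = ProposedAction("open breaker B-12 to isolate fault", confidence=0.90)
for level in AutonomyLevel:
    print(route_action(level, action))
```

Even at the Operator level, the gate keeps a human in the loop for low-confidence actions, which reflects the "human oversight is maintained" principle rather than a hard switch to full autonomy.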

The reliability of a grid’s “digital twin” is critical for management systems. How can technologies like Graph Neural Networks move beyond simply modeling the grid to actively correcting data errors in real time? Could you walk through a scenario where this prevents a cascading failure?

The concept of a “digital twin” is the very heart of a modern grid management system, and its accuracy is non-negotiable. If the twin is flawed, every decision based on it is at risk. This is where Graph Neural Networks, or GNNs, are a game-changer. They don’t just build a static model; they create a dynamic, geometric representation of the grid where the physical relationships between components are constantly being validated. Imagine a scenario where a sensor at a key substation malfunctions and starts reporting “zero current.” In a traditional system, this might go unnoticed until it causes a problem, or it would require an operator to manually investigate a confusing alert. A GNN, however, sees the grid as an interconnected network. It would instantly recognize that adjacent nodes are reporting active power flow and that, based on the laws of physics, a zero-current reading at that specific location is an anomaly. Instead of just flagging it, the AI can go a step further. It could autonomously correct the data point based on the validated readings from its neighbors or escalate a high-confidence alert to an operator, pinpointing the exact faulty sensor. This prevents the erroneous data from propagating into other critical systems, like outage management, and causing a misinformed, potentially dangerous operational decision that could otherwise lead to a localized overload and a cascading outage.
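The neighbor-consistency idea behind the "zero current" scenario can be illustrated without a trained model. The sketch below is a rule-based stand-in, not a Graph Neural Network: it checks a single bus against Kirchhoff's current law (currents flowing into a node must sum to approximately zero) and, if exactly one sensor reads near zero while the rest of the bus is unbalanced, estimates that sensor's value from its neighbors. A GNN would learn relationships like this across the whole grid graph; the branch names, sign convention, and 1 A tolerance here are assumptions for illustration.

```python
def check_bus_kcl(readings: dict[str, float], tolerance: float = 1.0):
    """
    readings: branch ID -> measured current in amperes, signed so that
    positive means flowing INTO the bus. By Kirchhoff's current law the
    true values should sum to (approximately) zero.
    Returns (status, corrections) where status is "consistent",
    "corrected", or "escalate".
    """
    residual = sum(readings.values())
    if abs(residual) <= tolerance:
        return "consistent", {}
    # Suspects: sensors reporting (near) zero while the bus is unbalanced.
    suspects = [b for b, v in readings.items() if abs(v) <= tolerance]
    if len(suspects) == 1:
        suspect = suspects[0]
        # The current that would balance the bus, given the other sensors.
        estimate = -(residual - readings[suspect])
        return "corrected", {suspect: estimate}
    # Ambiguous or no obvious culprit: flag for an operator instead of guessing.
    return "escalate", {}


# Hypothetical substation bus where line_C's sensor has failed and reads 0 A.
status, fixes = check_bus_kcl({"line_A": 120.0, "line_B": -70.0, "line_C": 0.0})
print(status, fixes)
```

The two outcomes mirror the answer: a single, unambiguous fault is corrected from neighboring readings, while anything less certain is escalated to an operator before the bad value can propagate into outage management.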

Vegetation-related issues account for a significant percentage of power outages. Beyond simply analyzing drone footage for existing problems, how can AI create a truly proactive maintenance schedule? What operational changes are required for utilities to shift from reactive clearing to predictive management?

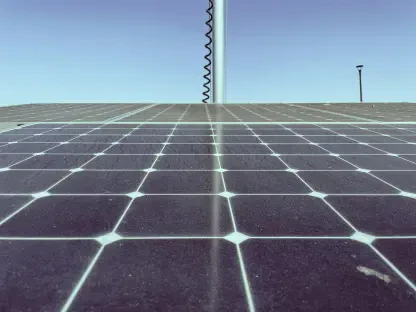

Vegetation management is a classic example of where we’ve been stuck in a reactive cycle for decades, and it’s a costly one: vegetation is responsible for over 20% of power outages in the U.S. Simply using drones to spot existing encroachments is an improvement, but it’s still reactive. The real leap forward is using AI to move into a truly predictive paradigm. This involves fusing multiple data sources. We can train geospatial AI models on LiDAR scans and satellite multispectral data not just to see where a tree is today, but to predict its growth trajectory over the next season. The model can factor in species type, soil moisture, and local weather patterns to forecast which specific areas will become high-risk zones. This allows utilities to create optimized trimming schedules that are proactive, not reactive. Operationally, this requires a significant shift. It means moving away from broad, cyclical clearing schedules to a data-driven, surgical approach. It requires trusting the AI’s predictions to prioritize work, dispatching crews to areas that aren’t problems yet but are forecast to become so. It’s about preventing the branch from ever falling, which can reduce vegetation-related damage by as much as 63%. It’s a fundamental change in mindset from “cleaning up” to “staying ahead.”
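The step from a growth forecast to a trimming schedule can be sketched simply. The example below assumes a linear growth model in place of the trained geospatial models described above: each span's current clearance (as might come from a LiDAR scan) is divided by a species growth rate, scaled by a moisture factor, to estimate years until encroachment, and only spans forecast to encroach within the planning horizon are scheduled. The species rates, the 3 m minimum clearance, the moisture scaling, and the span IDs are all hypothetical values for illustration.

```python
from dataclasses import dataclass

# Hypothetical annual vertical growth rates (meters per year) by species.
BASE_GROWTH_M_PER_YEAR = {"silver_maple": 0.9, "oak": 0.4, "pine": 0.6}


@dataclass
class Span:
    span_id: str
    species: str
    clearance_m: float      # current canopy-to-conductor distance, e.g. from LiDAR
    moisture_factor: float  # assumed scaling: >1.0 speeds growth, <1.0 slows it


def years_to_encroachment(span: Span, min_clearance_m: float = 3.0) -> float:
    """Years until clearance drops below the required minimum, assuming linear growth."""
    margin = span.clearance_m - min_clearance_m
    if margin <= 0:
        return 0.0  # already inside the minimum clearance zone
    growth = BASE_GROWTH_M_PER_YEAR[span.species] * span.moisture_factor
    return margin / growth


def prioritize(spans: list[Span], horizon_years: float = 1.0) -> list[tuple[str, float]]:
    """Return spans forecast to encroach within the horizon, most urgent first."""
    forecasts = [(s.span_id, years_to_encroachment(s)) for s in spans]
    at_risk = [item for item in forecasts if item[1] <= horizon_years]
    return sorted(at_risk, key=lambda item: item[1])


spans = [
    Span("S-101", "silver_maple", clearance_m=3.6, moisture_factor=1.2),
    Span("S-102", "oak", clearance_m=5.0, moisture_factor=1.0),
    Span("S-103", "pine", clearance_m=2.8, moisture_factor=0.9),
]
for span_id, years in prioritize(spans):
    print(f"{span_id}: encroaches in ~{years:.2f} years")
```

Note that S-101 is not yet a problem but is still scheduled, because it is forecast to encroach within the year. That is the "dispatching crews to areas that aren't problems yet" shift in miniature.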

The concept of Virtual Power Plants (VPPs) relies heavily on accurate forecasting of customer-owned assets. What are the key AI models and data inputs needed to make these VPPs reliable enough to serve critical loads like data centers during peak demand?

Virtual Power Plants are one of the most exciting frontiers for grid flexibility, but their reliability hinges entirely on the accuracy of their forecasts. To make a VPP robust enough to support a critical load like a data center, you need an AI framework that goes far beyond simple historical analysis. The key is to ingest a rich, multivariate stream of inputs: real-time weather patterns, hyper-local economic indicators, historical load profiles, and even social event calendars. Emerging transformer-based models, which have proven so powerful in natural language processing, are being adapted for time-series data. Their strength is a self-attention mechanism that can weigh the importance of different inputs and capture complex, long-range dependencies. For example, the model can learn that a certain combination of a forecast heatwave, a weekday afternoon, and a local sporting event will create a very specific pattern of demand and of solar generation from rooftop panels. By mastering this complex interplay, AI can produce highly accurate flexibility forecasts, allowing utilities to confidently orchestrate thousands of customer-owned devices, such as smart thermostats, EV chargers, and battery storage, into a cohesive, dispatchable resource that can reliably reduce stress on the grid or provide power during peak usage.
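The self-attention mechanism mentioned above can be shown in a few lines. The sketch below implements scaled dot-product self-attention over a short sequence of hourly feature vectors, in pure Python for self-containment. It is deliberately simplified: production time-series transformers add learned query/key/value projections, multiple heads, and positional encodings, all omitted here. The feature layout (standardized temperature, a weekday-afternoon flag, a local-event flag) and the values are hypothetical.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: list[list[float]]) -> tuple[list[list[float]], list[list[float]]]:
    """
    Scaled dot-product self-attention over a sequence of time steps.
    x: T time steps, each a d-dimensional feature vector.
    Simplification: queries, keys, and values are x itself (no learned projections).
    Returns (outputs, weights), where weights[t][s] is how much step t attends to step s.
    """
    d = len(x[0])
    scale = math.sqrt(d)
    weights, outputs = [], []
    for q in x:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in x]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted average of the value vectors.
        outputs.append([sum(w[s] * x[s][j] for s in range(len(x))) for j in range(d)])
    return outputs, weights


# Hypothetical hourly features: [temperature (standardized), weekday_afternoon, local_event]
hours = [
    [0.2, 0.0, 0.0],
    [1.5, 1.0, 0.0],
    [1.8, 1.0, 1.0],  # hot weekday afternoon with an event
    [0.1, 0.0, 0.0],
]
outputs, weights = self_attention(hours)
print([round(w, 3) for w in weights[2]])
```

In this toy sequence the hot, event-day hour attends most strongly to itself and to the other hot weekday hour, which is the kind of input weighting the answer describes; a trained model learns which combinations matter rather than relying on raw dot products.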

What is your forecast for the electric grid over the next decade as AI integration becomes more widespread?

My forecast is one of profound transformation, but it won’t be an overnight revolution. Over the next decade, I see the electric grid evolving from a system of rigid, siloed infrastructure into an intelligent, integrated, and adaptive ecosystem. AI will act as the central nervous system. We will see predictive maintenance become standard practice, dramatically reducing unplanned outages and extending the life of our existing assets. Grid operators will be augmented by AI advisors, allowing them to make faster, more informed decisions under pressure, enhancing both safety and reliability. The most significant shift, however, will be on the demand side. The proliferation of VPPs, powered by precise AI forecasting, will unlock an unprecedented level of flexibility, turning millions of homes and businesses into active grid participants. This won’t eliminate the need for deep human expertise—far from it. Instead, it will elevate the role of grid engineers and operators from manual controllers to strategic orchestrators of an incredibly complex, AI-driven system. The grid of the future will be defined by its ability to anticipate, adapt, and optimize, and AI is the indispensable tool that will make that future a reality.