I’m thrilled to sit down with Christopher Hailstone, a seasoned expert in energy management and renewable energy with a deep understanding of electricity delivery. As our Utilities expert, Christopher offers unparalleled insights into grid reliability and security, making him the perfect person to unpack the complexities of flexible grid connections and innovative capacity solutions for data centers. In this conversation, we explore how these strategies can slash costs, accelerate deployment timelines, and enhance grid efficiency, while also delving into the tools and methodologies shaping the future of energy systems. We’ll also touch on the challenges and opportunities that lie ahead in scaling these approaches for broader impact.

How do flexible grid connections with conditional firm service actually function in real-world scenarios, and what kind of impact do they have on costs?

Thanks for having me, Carlos. Flexible grid connections with conditional firm service are a game-changer because they let data centers connect to the grid without the guarantee, built into traditional firm interconnections, that their full capacity will be available 100% of the time. In practice, a data center might agree to a 20% conditional firm service, under which that share of its load can be curtailed during peak demand or grid stress events, reducing the need for massive upfront infrastructure builds. The setup process starts with a detailed assessment of the grid’s transmission capacity at the connection point, followed by negotiations between the utility and the data center on curtailment thresholds and operational flexibility. Then advanced monitoring and control systems are installed to ensure real-time communication; think of it as a constant dialogue between the grid and the data center to manage load dynamically. I recall a project in the PJM region where this approach was piloted; the report I worked on showed it eliminated the need for 273 MW of new generation additions per gigawatt of demand, translating to savings of $78 million per gigawatt. That’s not just numbers on a page. It’s tangible relief for utilities and ratepayers, like lifting a heavy weight off everyone’s shoulders.
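The curtailment mechanics described above can be sketched in a few lines of Python. This is a minimal illustration, not the utility's or the report's actual control logic: it assumes "20% conditional firm service" means 20% of the contracted load is curtailable, and the `FlexibleConnection` class, the `allowed_load` method, and the real-time `headroom_mw` signal are all hypothetical names introduced here.

```python
from dataclasses import dataclass


@dataclass
class FlexibleConnection:
    """Hypothetical connection agreement split into a firm and a conditional part."""
    contracted_mw: float      # total load the data center may draw under normal conditions
    conditional_share: float  # assumed fraction of contracted_mw that may be curtailed

    @property
    def firm_mw(self) -> float:
        # The firm portion is served even during grid stress events.
        return self.contracted_mw * (1.0 - self.conditional_share)

    def allowed_load(self, headroom_mw: float) -> float:
        """Maximum draw permitted in one interval.

        headroom_mw is an assumed real-time signal from the utility: spare
        capacity at the connection point beyond the firm commitment.
        """
        conditional_mw = self.contracted_mw - self.firm_mw
        # The conditional block is served only up to the available headroom.
        return self.firm_mw + min(conditional_mw, max(0.0, headroom_mw))


def curtailed_mwh(conn: FlexibleConnection, hourly_headroom_mw: list[float]) -> float:
    """Energy curtailed over a series of one-hour intervals."""
    return sum(conn.contracted_mw - conn.allowed_load(h) for h in hourly_headroom_mw)


conn = FlexibleConnection(contracted_mw=500.0, conditional_share=0.20)
# Illustrative headroom values: ample, tight, none (a stress event), ample.
print(conn.firm_mw, curtailed_mwh(conn, [250.0, 30.0, 0.0, 120.0]))
```

The design keeps the firm block untouchable and treats only the conditional block as a function of grid conditions, which is the core trade a conditional firm agreement makes; real agreements would add notice periods, event limits, and telemetry requirements.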

What’s behind the accelerated timeline for a 500-MW data center using flexible connections and bring-your-own-capacity (BYOC) to become fully operational in just two years?

The speed is really striking, isn’t it? Traditionally, interconnection processes drag on for three to five years because of lengthy studies, permitting, and the construction of new transmission or generation assets. With flexible connections and BYOC, we’re cutting through that red tape by rethinking the entire approach. The key factors are twofold. First, flexible connections reduce the need for extensive grid upgrades by letting the data center share existing capacity on the condition that its load can be curtailed when the grid is stressed, so you’re not waiting on massive capital projects. Second, BYOC makes data centers responsible for sourcing their own accredited capacity directly, whether solar, wind, or storage, which streamlines the approval process because they’re not fully reliant on utility-driven builds. The stages typically involve an initial feasibility study, resource procurement by the data center, and then integration with the grid under a tailored agreement. I’ve seen this play out in a project where a tech giant pushed hard for a rapid rollout; their proactive approach to securing resources and agreeing to flexible terms shaved years off the timeline. It felt like watching a relay race where everyone knew their role and passed the baton without a hitch. Two years from concept to operation is no small feat.

Can you walk us through how BYOC arrangements work in practice and explain the substantial cost savings they offer?

Absolutely. BYOC is fascinating because it flips the traditional model on its head. Instead of waiting for utilities to build or allocate capacity, data centers procure their own accredited resources, like renewable energy or storage systems, and offer them into the market to boost supply. The process starts with the data center identifying cost-effective, reliable resources, often through partnerships with renewable developers or direct investments, and ensuring they meet grid accreditation standards. Then they integrate these resources into the grid under a contractual framework that balances their needs with system reliability. The savings are staggering: the report I contributed to highlighted a reduction of $326 million per gigawatt in system supply costs because you’re not building redundant capacity. I remember working with a utility that was initially skeptical, but when they saw a data center bring in a mix of solar and storage that offset peak demand, it was a lightbulb moment. Everyone realized this could ease rate pressures for other customers while keeping the grid stable. It’s a win-win that feels like finding an unexpected shortcut on a long road trip.
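To show what "meeting accreditation standards" means numerically, here is a minimal Python sketch of a BYOC portfolio's capacity credit. It assumes an accreditation approach similar in spirit to effective load carrying capability (ELCC), where each resource counts for a fraction of its nameplate rating; the `Resource` class, the accreditation factors, and the 500 MW obligation are made-up illustrations, and actual accreditation rules differ by market.

```python
from dataclasses import dataclass


@dataclass
class Resource:
    name: str
    nameplate_mw: float
    # Hypothetical accreditation factor: the fraction of nameplate capacity the
    # market counts toward reliability (similar in spirit to an ELCC rating).
    accreditation_factor: float


def accredited_mw(portfolio: list[Resource]) -> float:
    """Total capacity credit the portfolio brings to the system."""
    return sum(r.nameplate_mw * r.accreditation_factor for r in portfolio)


def remaining_obligation_mw(portfolio: list[Resource], obligation_mw: float) -> float:
    """Capacity still needed from the system after crediting the BYOC portfolio."""
    return max(0.0, obligation_mw - accredited_mw(portfolio))


# Illustrative portfolio for a data center with an assumed 500 MW obligation.
portfolio = [
    Resource("solar", nameplate_mw=400.0, accreditation_factor=0.25),
    Resource("storage", nameplate_mw=300.0, accreditation_factor=0.75),
]
print(accredited_mw(portfolio), remaining_obligation_mw(portfolio, 500.0))
```

The gap between nameplate (700 MW here) and accredited capacity is why a BYOC portfolio has to be sized against accredited values rather than installed megawatts.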

How do modeling tools like integrated systems platforms and generation capacity models help clarify the impacts of these new approaches on grid costs and needs?

Modeling tools are the backbone of understanding complex grid interactions, especially with innovations like flexible connections and BYOC. Platforms like the ones we used in our analysis simulate real-world transmission capacity and flexibility requirements, while generation models assess how much new capacity is truly needed and calculate system-wide cost and emissions impacts. In our work, we applied these tools to transmission data from a utility in the PJM footprint, pairing it with fleet-level modeling to see how data center demand affects the broader system. The process involves inputting detailed grid data, running scenarios—say, with and without flexible connections—and analyzing outcomes like cost savings or capacity reductions. One standout finding was how much conditional firm service cut the need for new builds, saving $78 million per gigawatt; it was surprising to see how small tweaks in operational flexibility yielded such big results. I remember staring at the output charts late one night, coffee in hand, realizing we’d underestimated the ripple effect of these strategies—it was a humbling reminder of how data can reveal hidden opportunities.
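The "with and without flexible connections" scenario comparison can be illustrated with a deliberately simple planning calculation. This Python sketch is not the integrated platform or fleet model used in the report and does not reproduce its 273 MW or $78 million figures; it assumes a single planning peak, a flat reserve margin, and that the curtailable share of data center load is fully curtailed at system peak. The function name and all system numbers are hypothetical.

```python
def new_capacity_needed_mw(base_peak_mw: float, dc_mw: float, curtailable_share: float,
                           reserve_margin: float, existing_accredited_mw: float) -> float:
    """Capacity additions needed so accredited supply covers peak plus a reserve margin.

    Assumes the curtailable share of the data center load is curtailed at
    system peak, so only its firm portion adds to the planning peak.
    """
    planning_peak = base_peak_mw + dc_mw * (1.0 - curtailable_share)
    requirement = planning_peak * (1.0 + reserve_margin)
    return max(0.0, requirement - existing_accredited_mw)


# Hypothetical system: 10 GW of existing peak, a 1 GW data center, a 15% reserve
# margin, and 12 GW of existing accredited capacity.
scenarios = {"firm connection": 0.0, "flexible connection": 0.20}
results = {
    name: new_capacity_needed_mw(10_000.0, 1_000.0, share, 0.15, 12_000.0)
    for name, share in scenarios.items()
}
avoided = results["firm connection"] - results["flexible connection"]
for name, mw in results.items():
    print(f"{name}: {mw:,.0f} MW of new capacity")
print(f"avoided: {avoided:,.0f} MW")
```

Real studies replace the single peak with hourly chronological dispatch and capacity expansion, which is where effects beyond this simple arithmetic, like the ripple effects mentioned above, come from.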

In what ways do flexible data centers enhance the efficient use of a utility’s generation fleet, and what does this mean for infrastructure and customers?

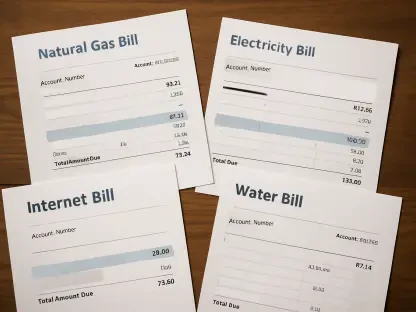

Flexible data centers are a boon for efficiency because they operate at a high load factor, meaning they consume power more consistently than many other customers. This allows utilities to move more megawatt-hours through the same infrastructure without the peaks and valleys that strain the system. On the ground, it means existing generation portfolios, whether gas, solar, or wind, get utilized more effectively, spreading fixed costs over more energy sales. The report I worked on emphasized this point, noting it can ease rate pressure for all customers, which is huge when you think about the rising costs folks face. I recall visiting a control room during a high-demand period and watching operators breathe easier because a nearby data center’s steady load balanced out fluctuations; it was like seeing a tightrope walker find their center. For customers, this translates to more stable bills, and for utilities, it’s a chance to delay or avoid costly new builds, maximizing every dollar invested in the grid.
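Load factor and the "spreading fixed costs" argument are simple ratios, sketched here in Python with made-up numbers. Load factor is average load divided by peak load over a period; fixed cost per MWh is a fixed cost divided by the energy delivered. The four-hour profiles and the $40,000 fixed cost are hypothetical, and the sketch assumes the existing infrastructure can carry the added load without new fixed costs.

```python
def load_factor(hourly_mw: list[float]) -> float:
    """Average load divided by peak load over the period (1.0 means perfectly flat)."""
    return (sum(hourly_mw) / len(hourly_mw)) / max(hourly_mw)


def fixed_cost_per_mwh(fixed_cost: float, hourly_mw: list[float]) -> float:
    """Spread a fixed cost over the energy delivered (one-hour intervals, so MW equals MWh)."""
    return fixed_cost / sum(hourly_mw)


# Made-up four-hour snapshot: a peaky existing load and a flat data center load.
existing = [50.0, 50.0, 100.0, 200.0]
data_center = [180.0] * 4
combined = [a + b for a, b in zip(existing, data_center)]

print(load_factor(existing), load_factor(data_center), load_factor(combined))
# Same fixed cost, more megawatt-hours: the cost per MWh falls, provided the
# existing infrastructure can carry the added load without new fixed costs.
print(fixed_cost_per_mwh(40_000.0, existing), fixed_cost_per_mwh(40_000.0, combined))
```

The flat data center profile lifts the combined load factor from 0.5 to about 0.74, and the same fixed cost is spread over nearly three times as many megawatt-hours.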

What challenges do you foresee in researching the system-level impacts of BYOC portfolios, especially with cleaner generation, and where should we start digging deeper?

The potential of BYOC with cleaner generation is exciting, but it’s not without hurdles. One major challenge is understanding how multiple BYOC portfolios interact at a system level—will they compete for the same grid access, or could they destabilize supply if not properly coordinated? Another issue is ensuring that cleaner resources like wind or solar, which are intermittent, can reliably meet accreditation standards for data centers with near-constant demand. There’s also the question of equity—how do we ensure smaller players or other customer classes aren’t squeezed out as big data centers dominate capacity markets? I think a good starting point for research is to simulate multi-portfolio scenarios in regions with high data center growth, like PJM, to map out operational conflicts and reliability risks. I remember an early discussion with a colleague about a wind-heavy BYOC portfolio that looked great on paper but struggled during low-wind periods; it made me realize we need robust storage integration studies to backstop these cleaner options. It’s a complex puzzle, but solving it could redefine how we balance growth and sustainability.
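The low-wind problem described above is the kind of question a storage integration study starts with. Here is a minimal Python sketch, not a reliability study: it dispatches a hypothetical wind-plus-storage BYOC portfolio against a flat data center load hour by hour and counts the energy left unserved. It assumes one-hour steps, a lossless battery that starts empty, greedy charge and discharge, and no grid imports; the function name and the wind profile are made up for illustration.

```python
def unserved_energy_mwh(load_mw: float, wind_mw: list[float],
                        storage_mwh: float = 0.0, storage_mw: float = 0.0) -> float:
    """Greedy hourly dispatch of a wind-plus-storage portfolio against a flat load.

    Assumptions: one-hour steps, a lossless battery that starts empty, and any
    remaining deficit counted as unserved (no grid imports).
    """
    soc = 0.0        # battery state of charge, MWh
    unserved = 0.0
    for wind in wind_mw:
        net = wind - load_mw
        if net >= 0:
            # Surplus wind charges the battery, limited by power and energy ratings.
            soc = min(storage_mwh, soc + min(net, storage_mw))
        else:
            # Deficits are covered by discharging, limited by power rating and charge.
            discharge = min(-net, storage_mw, soc)
            soc -= discharge
            unserved += -net - discharge
    return unserved


# Made-up six-hour profile with a low-wind stretch in the middle.
wind = [180.0, 160.0, 40.0, 20.0, 30.0, 150.0]
print(unserved_energy_mwh(100.0, wind))
print(unserved_energy_mwh(100.0, wind, storage_mwh=150.0, storage_mw=100.0))
```

In this toy case the battery covers two of the three low-wind hours but runs dry before the third, which illustrates why the duration of low-wind periods, not just average output, drives how much storage a cleaner portfolio needs. Extending the loop to several portfolios sharing one grid constraint would be a natural next step for the multi-portfolio questions raised above.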

What is your forecast for the future of flexible grid connections and BYOC in transforming data center integration with the grid?

Looking ahead, I’m incredibly optimistic about the role of flexible grid connections and BYOC in reshaping how data centers integrate with the grid. I foresee these approaches becoming standard practice within the next decade, as utilities and regulators come to view the cost savings (think hundreds of millions of dollars per gigawatt) and the accelerated timelines as essential for meeting booming demand. The push for cleaner generation will only accelerate this trend, with data centers potentially becoming net contributors to grid decarbonization through BYOC portfolios heavy on renewables and storage. Challenges like system coordination and equitable access will need to be addressed, but I believe collaborative frameworks between utilities, tech companies, and policymakers will emerge to tackle them. I can almost picture bustling control rooms where data centers aren’t just loads but active partners in grid stability. It’s a future worth building toward, and I think we’re on the cusp of that shift.